Devlog: How We Compressed a Billion Nodes into 17MB

Our story starts with a client requesting a “simple” Battle Royale minigame. That supposedly simple request sent our definitively-very-well-paid developer (Giov4) down a two-year-long rabbit hole of algorithmic problems that turned out to be anything but simple: isn’t life beautiful?

But let’s start from the beginning.

To have a great Battle Royale mode, you need a great map reset system. We already had one, battle-tested in our Skywars minigame, but it had been designed for small, floating islands and tightly scoped arenas.

This new project was different. The map was massive: about 1000 x 1000 x 1000 nodes. We were dealing with a volume of roughly a billion nodes.

This is a technical deep dive into how standard engine tools failed at this scale, how we crashed servers with 20GB RAM spikes (oopsie!), and how we eventually ended up writing a custom Octree implementation to manage the unmanageable. We’ll also pretend to make the topic funny with some pictures, like the one below. Haha, pictures!

The Failure of Standard Approaches

Our legacy reset system relied on a fairly standard approach: tracking changes. We hooked into the engine’s Lua functions and callbacks (e.g. on_place, on_dig, set_node) and simply recorded every modification a player made. When the match ended, we reversed the list.
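To make the idea concrete, here is a minimal sketch of such a change journal, written in Python for brevity (the real system is Lua). The `ChangeJournal` class and the dict-based `world` are hypothetical stand-ins, not the actual Skywars code. The last write in the demo imitates a change that never goes through a callback.

```python
class ChangeJournal:
    """Records node changes so they can be undone in reverse order."""

    def __init__(self):
        self._entries = []  # (pos, previous_node), in chronological order

    def record(self, world, pos, new_node):
        # Stand-in for an on_place / on_dig style callback.
        self._entries.append((pos, world.get(pos, "air")))
        world[pos] = new_node

    def rollback(self, world):
        # Undo newest-first, so repeated edits to one position end at the original.
        while self._entries:
            pos, old = self._entries.pop()
            world[pos] = old


world = {(0, 0, 0): "stone"}
journal = ChangeJournal()
journal.record(world, (0, 0, 0), "air")   # a player digs
journal.record(world, (0, 0, 0), "wood")  # a player places
world[(1, 0, 0)] = "lava"                 # a write that skips the journal
journal.rollback(world)
```

After the rollback, the tracked position is back to stone, while the untracked lava is still there. That gap is the whole problem.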

Great, right? Hah, you dumb dumb: such an approach falls apart in a complex game mode, because surprise, callbacks don’t really track everything. Enter Voxel Manipulators!

Luanti allows modders to use Voxel Manipulators (VoxelManips) to perform bulk block operations efficiently. These operations bypass Lua callbacks entirely to maintain performance, so a callback-based tracker never sees their changes. As an example, imagine you want to cause a really big TNT explosion and you use a VoxelManip to do that: TNT goes kaboom, blocks go kaboom, oh wait we can’t track that, you realise your map is gone for good, internal screaming, 5 stages of grief at 1 per second, reevaluation of your life goals, go back to your childhood, find a comfortable thought, you remember your map is still gone for good, WE’RE HAVING FUN!

Even worse were liquids: flowing lava updates the map but doesn’t trigger a set_node event, so… yes, again, no way to track it. Your map is now a simulation of Venus, you’re welcome.

The Memory Wall

Since we couldn’t trust the callbacks to catch everything, we thought: “Let’s just save the area beforehand and restore it when the game ends.” Here we meet our friends VoxelManips again, because the standard way in Luanti to read a map area is using a VoxelManip object. This reads the map data into a flat array in memory: for small areas this is blazing fast. For a billion nodes, it’s… quite an experience. When we tried to load the full arena into RAM to create our snapshot, the server’s memory usage skyrocketed to something like 20GB, freezing the server.

We thought of partitioning the map into smaller chunks, saved as standard schematic files on disk, but we would have hit an I/O bottleneck: saving 100k+ small files would have taken forever. Conversely, saving fewer, larger files would have brought us back to the memory problem: to write a large schematic, you first have to load that large area into RAM. It was a catch-22. We needed a way to store a massive, highly repetitive dataset in memory without the overhead of a flat array.

Now, it might be possible that we might have lied about the definitively-very-well-paid part. So in the end for the Battle Royale we decided to use the original Skywars system, paired with custom inefficient but compatible implementations of liquids and TNT nodes.

Until…

…Giov4 decided to hate himself. He spent two years working on a solution for this problem during his free time, instead of having fun, doing drugs or, seriously, anything but this. And what was the solution? Abandon the engine’s default storage formats and implement a custom data structure: an octree.

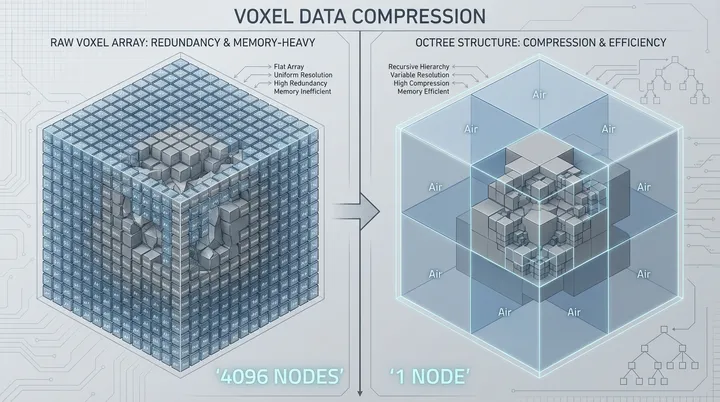

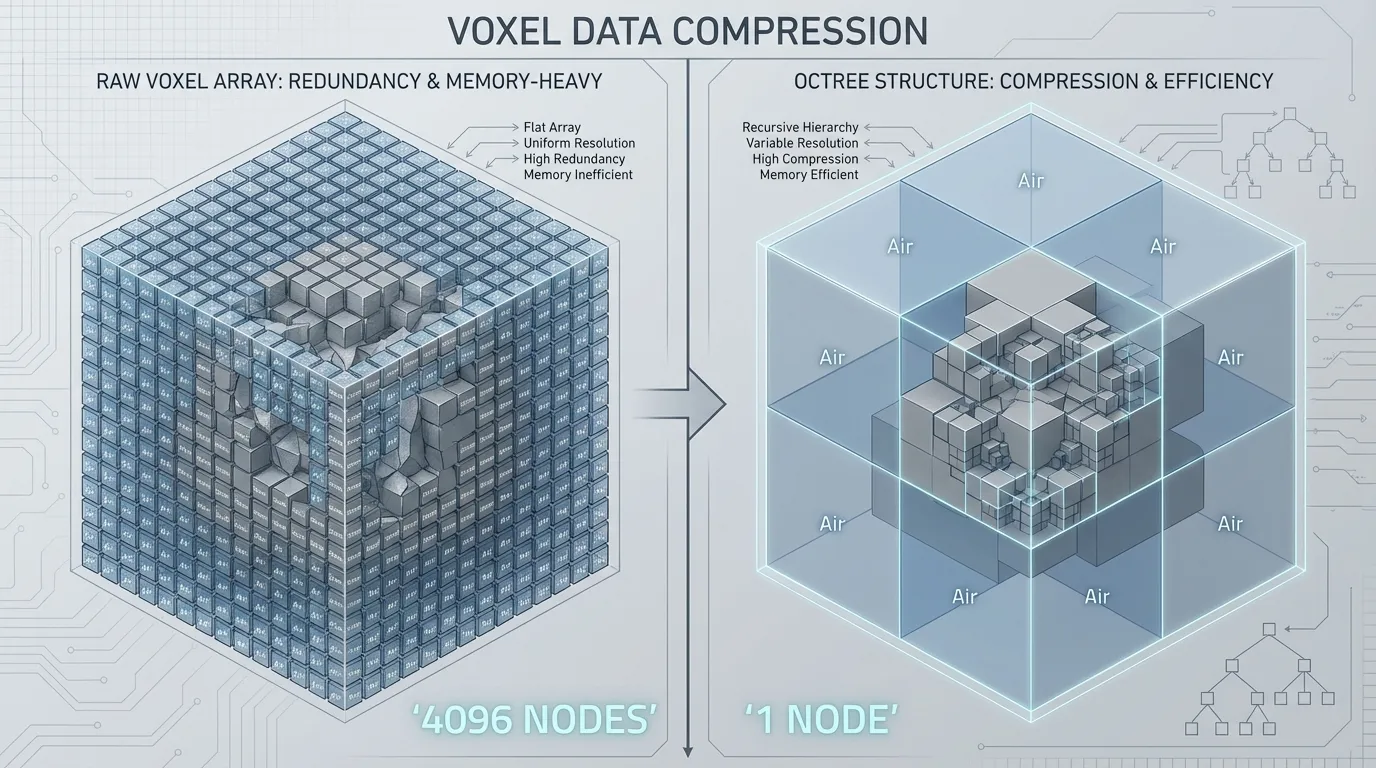

Voxel worlds are highly repetitive: a 16x16x16 chunk of the sky is usually 4096 nodes of air, a chunk of the underground is often 4096 nodes of stone, and so on. A flat array just stores 4096 entries in either case. An octree, instead, is recursive: if a subdivision of the tree contains identical nodes, it collapses into a single leaf. Meaning: a 16x16x16 chunk of air doesn’t take 4096 memory slots anymore; it takes one.
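The collapsing rule can be sketched in a few lines. This is an illustrative Python version, not the mod's Lua code: the function names, the nested-list grid and the "leaf is a string, branch is a list of 8" representation are all assumptions made for the example.

```python
def build_octree(grid, x, y, z, size):
    """Return a node name (leaf) or a list of 8 child subtrees."""
    if size == 1:
        return grid[x][y][z]
    half = size // 2
    children = [
        build_octree(grid, x + dx, y + dy, z + dz, half)
        for dx in (0, half) for dy in (0, half) for dz in (0, half)
    ]
    first = children[0]
    # Collapse: eight identical leaves become one leaf.
    if isinstance(first, str) and all(c == first for c in children):
        return first
    return children


def count_leaves(tree):
    return 1 if isinstance(tree, str) else sum(count_leaves(c) for c in tree)


def get_node(tree, x, y, z, size):
    # Walk down, picking the octant that contains (x, y, z) at each level.
    while not isinstance(tree, str):
        size //= 2
        tree = tree[(x >= size) * 4 + (y >= size) * 2 + (z >= size)]
        x, y, z = x % size, y % size, z % size
    return tree


SIZE = 16
sky = [[["air"] * SIZE for _ in range(SIZE)] for _ in range(SIZE)]
sky_tree = build_octree(sky, 0, 0, 0, SIZE)       # collapses to the leaf "air"

sky[3][5][7] = "stone"                            # one odd node in the chunk
mixed_tree = build_octree(sky, 0, 0, 0, SIZE)
```

In this toy version, the all-air chunk becomes a single leaf, and adding one stone node costs 29 leaves instead of 4096 array slots: at each of the four levels, seven octants stay collapsed and only one gets subdivided.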

Unfortunately all the octree diagrams out there suck and all of this is made in our free time. So yes, the octree diagram above is AI-made.

But simply using an octree wasn’t enough; we had to implement several layers of aggressive compression to get the footprint down. Big nerd time incoming.

Dominant Node Optimization

When the system scans a chunk, it finds the most frequent node. If a chunk is 80% stone, 10% dirt, and 10% air, we don’t store the stone: we set “Stone” as the default value for that specific tree node and only store the exceptions. Then, if a generated octree contains nothing but the map’s global default (usually air), it is discarded entirely and lookups fall back to that global default. In a map that is 50% sky, this basically slashes the memory usage in half!
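A rough sketch of the idea, in Python and on a flat dict rather than on real tree nodes (so it is a simplification of what the mod does). `encode_chunk`, `read_node` and the dict layout are hypothetical names invented for the example.

```python
from collections import Counter


def encode_chunk(nodes, map_default="air"):
    """nodes: dict mapping (x, y, z) -> node name for one chunk."""
    dominant, _ = Counter(nodes.values()).most_common(1)[0]
    exceptions = {pos: n for pos, n in nodes.items() if n != dominant}
    if dominant == map_default and not exceptions:
        return None  # the chunk is pure map default: store nothing at all
    return {"default": dominant, "exceptions": exceptions}


def read_node(encoded, pos, map_default="air"):
    if encoded is None:
        return map_default
    return encoded["exceptions"].get(pos, encoded["default"])


# 10 nodes: 8 stone, 1 dirt, 1 air (the 80/10/10 split from the text).
chunk = {(i, 0, 0): "stone" for i in range(8)}
chunk[(8, 0, 0)] = "dirt"
chunk[(9, 0, 0)] = "air"

encoded = encode_chunk(chunk)                              # stores only 2 exceptions
sky = encode_chunk({(i, 0, 0): "air" for i in range(10)})  # stores nothing
```

Only the two non-stone nodes are stored for the first chunk, and the all-air chunk is not stored at all.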

ZSTD Compression

Once the octree is built, it is serialized into a string and compressed using ZSTD. We are basically constructing the map by streaming compressed mapblocks!
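The serialize-then-compress step looks roughly like this. Two loud assumptions: the sketch uses JSON as the serialization format, and it swaps in `zlib` from the Python standard library as a stand-in for ZSTD. The mod's real format is not described here, so treat `pack_tree` and `unpack_tree` as hypothetical helpers.

```python
import json
import zlib


def pack_tree(tree):
    # Serialize the tree to a compact string, then compress the bytes.
    raw = json.dumps(tree, separators=(",", ":")).encode("utf-8")
    return zlib.compress(raw, 9)


def unpack_tree(blob):
    return json.loads(zlib.decompress(blob).decode("utf-8"))


# A leaf is a node name, a branch is a list of 8 children.
tree = ["air", "air", "stone", ["dirt"] * 8, "air", "air", "air", "stone"]
blob = pack_tree(tree)
roundtrip = unpack_tree(blob)
```

The important property is the lossless round trip: whatever goes into the blob must come back out identical, otherwise the reset would slowly corrupt the map.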

The results were absurd: a 1 billion node map that required almost 20GB of RAM in a raw format was compressed down to just 17MB on disk. In RAM, when fully loaded but compressed, it occupied roughly 30MB. That’s ~1,205x smaller on disk and ~683x smaller in memory!
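For the curious, the ratios above can be checked with a few lines of arithmetic. The sketch assumes binary units (1GB = 1024MB); the per-node figures at the end are simply derived from the same numbers, not separately measured.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2

nodes = 1_000_000_000
raw_bytes = 20 * GIB    # flat, uncompressed representation
disk_bytes = 17 * MIB   # compressed snapshot on disk
ram_bytes = 30 * MIB    # compressed snapshot loaded in RAM

disk_ratio = raw_bytes / disk_bytes            # about 1205
ram_ratio = raw_bytes / ram_bytes              # about 683
raw_bytes_per_node = raw_bytes / nodes         # about 21.5 bytes per node
disk_bits_per_node = disk_bytes * 8 / nodes    # about 0.14 bits per node

print(f"{disk_ratio:.0f}x on disk, {ram_ratio:.0f}x in memory")
```

In other words, the raw format spends around 21 bytes on every node, while the compressed snapshot averages well under one bit per node.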

The Lazy Memory Model

Unfortunately compressing the map is only half the battle. You still need to read it — as démodé as that may sound. If we decompressed the entire map into a flat array, only to look up a single node, we would loop back to the initial problem.

The solution was a Lazy Loading system backed by an LRU (Least Recently Used) cache. The map stays compressed in RAM and when the game queries a specific location:

- We identify which compressed “blob” (chunk) contains that coordinate;

- We check the LRU cache;

- If it’s a miss, we decompress only that specific chunk, parse the octree, and cache the result;

- If it’s a hit, we query the cached tree.

The cache size is limited to 5% of the server’s max memory budget. However, because the decompressed octree structure is so lightweight, filling this budget is difficult. In practice, entries are almost never evicted; once a chunk is loaded, it stays resident until the server restarts. Combined with the fact that players naturally congregate in specific zones and ignore the map’s extremes, we rarely encounter a cache miss, bypassing the decompression pipeline entirely!
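The lookup path described above fits in a small class. This is a Python sketch under several assumptions: the cache is capped by entry count rather than by a memory budget, `zlib` stands in for ZSTD, and a decoded chunk is a simple "default plus exceptions" dict instead of a real octree. `LazyMap` and `pack` are hypothetical names.

```python
import json
import zlib
from collections import OrderedDict

CHUNK = 16


def pack(chunk):
    return zlib.compress(json.dumps(chunk).encode("utf-8"))


class LazyMap:
    def __init__(self, blobs, max_entries):
        self.blobs = blobs          # chunk key -> compressed bytes, always in RAM
        self.cache = OrderedDict()  # chunk key -> decoded chunk, oldest first
        self.max_entries = max_entries
        self.misses = 0

    def get_node(self, x, y, z):
        key = (x // CHUNK, y // CHUNK, z // CHUNK)  # which blob holds the position
        chunk = self.cache.get(key)
        if chunk is None:
            # Miss: decompress and parse only this chunk, then cache it.
            self.misses += 1
            chunk = json.loads(zlib.decompress(self.blobs[key]))
            self.cache[key] = chunk
            if len(self.cache) > self.max_entries:
                self.cache.popitem(last=False)      # evict the least recently used
        else:
            self.cache.move_to_end(key)             # hit: mark as recently used
        local = f"{x % CHUNK},{y % CHUNK},{z % CHUNK}"
        return chunk["exceptions"].get(local, chunk["default"])


blobs = {
    (0, 0, 0): pack({"default": "stone", "exceptions": {"1,2,3": "dirt"}}),
    (1, 0, 0): pack({"default": "air", "exceptions": {}}),
}
world = LazyMap(blobs, max_entries=1)
first = world.get_node(1, 2, 3)    # miss: chunk (0, 0, 0) gets decompressed
second = world.get_node(0, 0, 0)   # hit: same chunk, no decompression
```

The demo uses a deliberately tiny cache of one entry so eviction is easy to trigger; with a realistic budget, the second branch is the one that runs almost all the time.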

Asynchronous Architecture

Nice, we are finally don- No.

Luanti runs mod code on a single main thread. This means if you try to process 1 billion nodes there, the server will hang for minutes. Our system had to be entirely asynchronous.

Reading: we can’t access the map directly from a worker thread. So the main thread grabs raw map data in manageable batches (via VoxelManip) and dispatches them to a pool of worker threads. These workers handle the heavy lifting — constructing the octree and running ZSTD compression — in isolation. Nice job, worker threads!
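The split between "main thread reads, workers crunch" can be sketched like this. It uses Python's `ThreadPoolExecutor` purely as an illustration of the pattern; Luanti's own worker mechanism is different, and `read_batch`, `build_and_compress` and `snapshot` are made-up names. The worker step only compresses here, where the real one would also build the octree.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def read_batch(world, start, count):
    # Main-thread step: copy one slice of raw map data (stand-in for a VoxelManip read).
    return bytes(world[start:start + count])


def build_and_compress(raw):
    # Worker step: works on its own copy, never touches the live map.
    return zlib.compress(raw)


def snapshot(world, batch_size, workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(build_and_compress, read_batch(world, start, batch_size))
            for start in range(0, len(world), batch_size)
        ]
        return [f.result() for f in futures]  # blobs, in map order


world = bytearray(b"\x00" * 5000 + b"\x01" * 3000)  # toy "map": air, then stone
blobs = snapshot(world, batch_size=1024)
```

Note that each batch is copied before it is handed over, so the workers never need access to the map itself.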

Writing: restoring the map is harder because we must write to the world on the main thread. To prevent lag, we implemented a Time Budget system (defaulting to 50ms per tick). The system processes the restore queue in small steps, checking the clock after every batch. If the budget is exceeded, it yields and resumes on the next tick. Simple but effective.
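A time-budgeted loop of this kind could look like the following Python sketch. `restore_step`, its parameters and the batch size of 8 are assumptions for illustration; only the 50ms default comes from the text above.

```python
import time
from collections import deque


def restore_step(queue, write_chunk, budget_s=0.050, batch=8, clock=time.monotonic):
    """Write queued chunks until the budget is spent. Returns True when the queue is empty."""
    deadline = clock() + budget_s
    while queue:
        for _ in range(min(batch, len(queue))):
            write_chunk(queue.popleft())
        if clock() >= deadline:
            break  # out of budget: stop here, the caller resumes on the next tick
    return not queue


queue = deque(range(100))  # 100 pending chunk ids
restored = []
ticks = 0
done = False
while not done:            # stand-in for the server calling us once per tick
    ticks += 1
    done = restore_step(queue, restored.append)
```

The clock is checked once per batch rather than once per chunk, which keeps the bookkeeping cheap while still bounding how far a single tick can overshoot.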

Auto-Tune: determining the right batch size for each server — how much data to grab or write at once — is very important. If the batch is too big, memory spikes; too small, and overhead kills performance. We built a tool that benchmarks the saving pipeline on the live server. It empirically tests different batch volumes to find the “sweet spot” that fits the server’s hardware limits, ensuring that neither reading nor writing operations ever trigger a stutter. Perfect for potato servers as well as computers with RGBs.
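As a very reduced illustration of the auto-tune idea, the sketch below times a workload at increasing batch sizes and keeps the largest one that still fits a time budget. It only looks at time, while the tool described above also has to care about memory, and `pick_batch_size` is a hypothetical helper, not the mod's API.

```python
import time


def pick_batch_size(process_batch, candidates, budget_s, timer=time.perf_counter):
    """Return the largest candidate whose run stays within budget_s."""
    best = min(candidates)
    for size in sorted(candidates):
        start = timer()
        process_batch(size)
        if timer() - start <= budget_s:
            best = size
        else:
            break  # bigger batches would only be slower
    return best


def fake_workload(size):
    # Placeholder for "read or write `size` units of map data".
    sum(range(size * 1000))


chosen = pick_batch_size(fake_workload, [8, 16, 32, 64, 128], budget_s=0.05)
```

The result depends entirely on the machine running it, which is the point: the same code picks a small batch on a potato and a big one on the RGB tower.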

The Surgical Restore

Finally, we had to solve the actual reset. Even with efficient storage, re-writing a billion nodes takes… time. We don’t want the player having to wait for minutes after every match (especially not in the era of TikTok attention spans. Oh, look, a squirrel!).

So we went back to tracking map modifications, but in a smarter way. We used on_mapblocks_changed, a recently introduced engine callback that notifies mods about every mapblock modification.

When a chunk is modified, we mark it as “Dirty” (🫦), and in the background we verify the change against our compressed reference. If they match (e.g. a false positive triggered by the engine emerging the chunks), we discard the entry.

If they differ, we schedule it for a restore. When the match ends, we don’t reload the whole map. We only overwrite the specific chunks that are actually dirty!
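Put together, the dirty-tracking flow might be sketched as follows. Again this is Python for illustration, with `zlib` standing in for ZSTD and raw bytes standing in for octrees; `DirtyTracker` and its methods are invented names, and the real verification runs in the background instead of in one call.

```python
import zlib


class DirtyTracker:
    def __init__(self, reference):
        self.reference = reference  # chunk key -> compressed original content
        self.dirty = set()

    def on_chunk_changed(self, key):
        # Stand-in for the engine's "these mapblocks changed" notification.
        self.dirty.add(key)

    def verify(self, world):
        # Drop false positives: chunks that still match the reference.
        self.dirty = {
            key for key in self.dirty
            if world[key] != zlib.decompress(self.reference[key])
        }

    def restore(self, world):
        # Overwrite only what is really different, then start clean.
        for key in self.dirty:
            world[key] = zlib.decompress(self.reference[key])
        restored = len(self.dirty)
        self.dirty.clear()
        return restored


original = {key: bytes([key]) * 4096 for key in range(3)}
reference = {key: zlib.compress(data) for key, data in original.items()}
world = dict(original)

tracker = DirtyTracker(reference)
tracker.on_chunk_changed(0)   # false positive: nothing actually changed
world[1] = b"\x09" * 4096     # a real modification
tracker.on_chunk_changed(1)
tracker.verify(world)
restored = tracker.restore(world)
```

Out of three chunks, two were reported and only one is rewritten at the end of the match.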

So, uhm… What a journey.

If you made it this far without your brain melting from all the “octree” and “VoxelManip” talk (unlike Zughy, during his proofreading): congratulations! You deserve a cookie. 🍪

Basically, what did we learn today? We learned that Lua is actually pretty capable if you treat it nicely. Sure, we miss C++ sometimes (who doesn’t love a good ol’ segfault?), but proving that you can solve “hardcore” engine problems just by writing smarter Lua scripts was… oddly satisfying.

It wasn’t a solo trip, though. AI helped us generate a massive suite of 69+ tests and keep the docs tidy from day one. Well aware of the nature of the majority of these tools, we hope to have used them for the common good (the octree mod is free software, AGPL 3.0), as a way to accelerate what was already in our minds but would have taken us months and months on our own. We only rely on donations and we’re not a company.

Anyway, thanks for reading our technical ramblings. We’ve got more stuff coming in the future, equally painful but hopefully equally fun.

If you want to help turn our definitively-very-well-paid developers into definitively-incredibly-well-paid ones, take a look at our Liberapay :3

See you around! ❤️